Program

Program overview and lecture material.

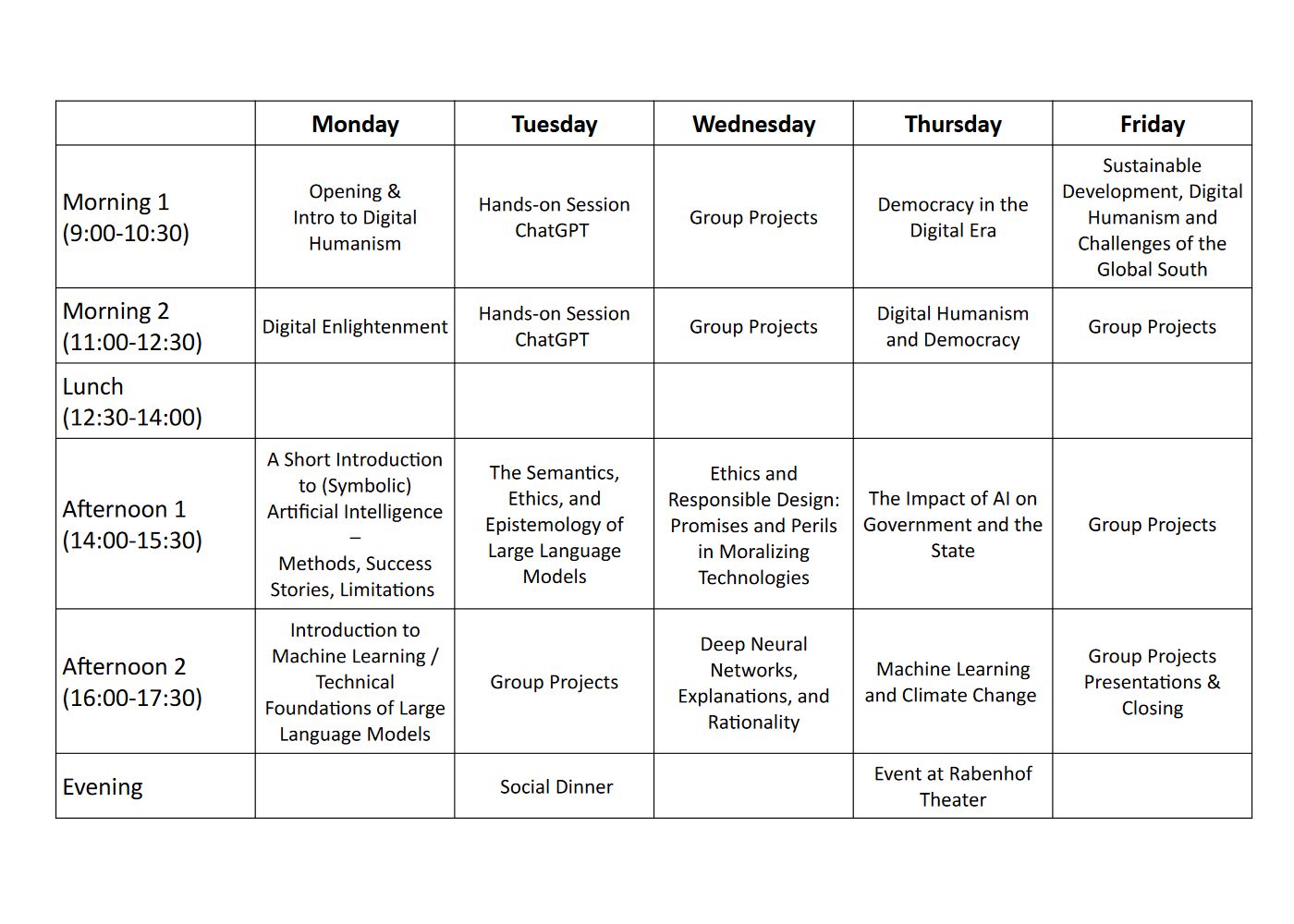

Program Overview (Times in CEST)

Detailed Program

Monday, September 4, 2023

- 8:30 - 9:00 Registration

Morning (9:00-12:30)

- 9:00 - 9:30 Opening and Welcome

- 9:30 - 9:45 Welcome Address by Dean Gerti Kappel, slides, video

- 9:45 - 10:00 Welcome Address by Enrico Nardelli (ACM Europe), slides, video

- 10:00 - 11:00 Hannes Werthner: Introduction to Digital Humanism, slides, video

- 11:30 - 12:30 George Metakides: Digital Enlightenment, slides, video

Afternoon (14:00-17:30)

- 14:00 - 15:30

Stefan Woltran: A Short Introduction to (Symbolic) Artificial Intelligence – Methods, Success Stories, Limitations, slides, video

In this lecture, we provide an overview of the most important methods in symbolic artificial intelligence. We explain why combinatorial explosion in search is a severe limitation and how algorithmic improvements can mitigate this issue in order to solve problems on the scale needed in industrial applications. We also discuss the main differences between symbolic AI and machine learning, and shall argue why the combination of both is crucial in different domains.

- 16:00 - 17:30 Peter Knees and Julia Neidhardt: Machine Learning / Technical Foundations of Large Language Models, slides, video

Tuesday, September 5, 2023

Morning (9:00-12:30)

- 9:00 - 10:30 Hands-on Session ChatGPT prepared by Thomas Kolb / Christian Doppler Lab for Recommender Systems, slides

- 11:00 - 12:30 Hands-on Session ChatGPT prepared by Ahmadou Wagne / Christian Doppler Lab for Recommender Systems, slides

Afternoon (14:00-17:30)

- 14:00 - 15:30

Erich Prem: The Semantics, Ethics, and Epistemology of Large Language Models, slides, video

Recent advances in large language models have raised numerous concerns, including from the perspective of digital humanism. In this talk, we take a closer look at large language models and how they are currently used. We start by arguing that such systems are extremely powerful while at the same time largely opaque. Large language models pose significant questions about how to best interpret them, both from a semantic and an epistemological perspective, i.e. regarding what they mean and what they know. These questions naturally link with the current debate about how to use these systems and how such systems should and should not be designed. We will discuss topics such as alignment for large language models, discourse ethics, and prohibitions of discourse.

- 16:00 - 17:30 Group Projects: Use Cases including AI and Work, AI in Public Service and Policy Making, AI and Journalism, AI and Education

Franco Accordino, Anita Eichinger, Monika Lanzenberger, Irina Nalis, Wolfgang Renner, Paul Timmers, Hannes Werthner

List of preliminary use cases

Evening

- 19:00 Social Dinner at 10er Marie, Ottakringerstraße 222-224, 1160 Vienna

Wednesday, September 6, 2023

Morning (9:00-12:30)

- 9:00 - 10:30 Group Projects: Participants will collaborate in groups to identify problems, generate ideas, and create solutions for the introduced use cases.

- 11:00 - 12:30 Group Projects (cont.)

Afternoon (14:00-17:30)

- 14:00 - 15:30

Viola Schiaffonati: Ethics and Responsible Design: Promises and Perils in Moralizing Technologies, slides, video

This talk focuses on the “moralization” of technologies, that is, the deliberate design of technologies in order to shape moral action and moral decision making. With the help of some thought experiments, I will discuss the promises but also the perils of moralizing technologies, with particular attention to computer technologies. Challenges to the moralization of technologies concern human autonomy, the opacity of design choices, and their regulation.

- 16:00 - 17:30

Edward A. Lee: Deep Neural Networks, Explanations, and Rationality, slides, video

"Rationality" is the principle that humans make decisions on the basis of step-by-step (algorithmic) reasoning using systematic rules of logic. An ideal "explanation" for a decision is a chronicle of the steps used to arrive at the decision. Herb Simon’s "bounded rationality" is the observation that the ability of a human brain to handle algorithmic complexity and data is limited. As a consequence, human decision making in complex cases mixes some rationality with a great deal of intuition, relying more on Daniel Kahneman's "System 1" than "System 2." A DNN-based AI, similarly, does not arrive at a decision through a rational process in this sense. An understanding of the mechanisms of the DNN yields little or no insight into any rational explanation for its decisions. The DNN is operating in a manner more like System 1 than System 2. Humans, however, are quite good at constructing post-facto rationalizations of their intuitive decisions. If we demand rational explanations for AI decisions, engineers will inevitably develop AIs that are very effective at constructing such post-facto rationalizations. With their ability to handle vast amounts of data, the AIs will learn to build rationalizations using many more precedents than any human could, thereby constructing rationalizations for ANY decision that will become very hard to refute. The demand for explanations, therefore, could backfire, resulting in effectively ceding to the AIs much more power.

Thursday, September 7, 2023

Morning (9:00-12:30)

- 9:00 - 10:30

George Metakides: Democracy in the Digital Era, slides, video

Different studies on the state of democracy world-wide, each using different indices and related weights, all reach similar conclusions. Democracy is “backsliding” for the 16th year in a row.

We will take a very brief look at the history of democracy as background to identifying the actual and potential impact of digital technologies, whether positive or negative, on democracy. In particular, we will use the current developments in Artificial Intelligence as a case study of how their positive potential can be best harnessed and their negative one limited. We will examine possible actions to reverse the current “Democratic Recession”: empowering uses of digital technologies that enhance democratic practices, guarding against uses with negative impact via appropriate regulation, and preventing undue concentration of economic and political power.

- 11:00 - 12:30 Allison Stanger: Digital Humanism and Democracy, video

Afternoon (14:00-17:30)

- 14:00 - 15:30

Paul Timmers: The Impact of AI on Government and the State, slides, video

This lecture is about state sovereignty in the age of AI. AI is already a familiar friend and foe of the state. As a friend, AI helps to improve public services, is an ally in the fight against organized crime, and a piece in the armor of defense of national security. AI becomes a foe when used indiscriminately (and discriminatorily) such that governments undermine their own legitimacy, or when used by adversaries to destabilize critical infrastructures, national security, justice, and democracy. In this lecture we will dive into sovereignty and the quest of governments for control, capabilities, and capacities in AI, that is, their AI strategic autonomy. In this context, the rise of generative AI and the prospect of fully autonomous systems have profound and not fully predictable implications for international relations, economies, societies, democratic governance, and what digital humanism stands for: human values and needs. We will then also discuss the role of digital humanism in shaping sovereignty in the age of AI.

- 16:00 - 17:30

David Rolnick: Machine Learning and Climate Change, video

Machine learning (ML) can be a useful tool in helping society reduce greenhouse gas emissions and adapt to a changing climate. In this talk, we will explore opportunities and challenges in ML for climate action, from optimizing electrical grids to monitoring crop yield, with an emphasis on how to incorporate domain-specific knowledge into machine learning algorithms. We will also consider how ML is used in ways that contribute to climate change, and how to better align the use of ML overall with climate goals.

Evening

- 19:00 Event at Rabenhof Theater: Cybernetic Socialists - Computer and Revolution in Allende’s Chile, audio part 1, audio part 2 (in German)

Friday, September 8, 2023

Morning (9:00-12:30)

- 9:00 - 10:30

Anna Bon: Sustainable Development, Digital Humanism and Challenges of the Global South, slides, video

The world is not on track to achieve the Sustainable Development Goals (SDGs), the global objectives set by the United Nations towards peace and prosperity for people and the planet. Over the past few years, global challenges have multiplied and intensified. New conflicts, natural disasters, economic hardship, pandemics, degrading natural environments and a rapidly changing climate have been witnessed. Unsurprisingly, people in low resource environments are among the most vulnerable to these threats. Technological innovations have long been considered appropriate tools for social and economic development; however, the rising influence of big tech and artificial intelligence is posing new concerns. Is it still possible to revert these trends? In this lecture, from the perspective of Digital Humanism, we will look at the Digital Divide that still exists between wealthy countries and extensive regions in the Global South. We will discuss how to leverage partnerships, collective action and grassroots approaches, cross-cultural education and socio-technical design, action research and the integration of various (scientific, indigenous) knowledge systems. How can we connect and serve people with digital technologies, e.g. through co-creation and collaboration instead of top-down interventions? And how can we do this ethically, without compromising solidarity, local agency, and human well-being? We will try to (jointly) set goals for a more sustainable, global digital society.

- 11:00 - 11:30 Hans Akkermans: The Social Responsibilities of Scientists and Technologists in the Digital Age, slides

- 11:30 - 12:30 Group Projects

Afternoon (14:00-17:30)

- 14:00 - 15:00 Group Projects (cont.)

- 15:30 - 17:00 Group Projects Presentations

- 17:00 - 17:30 Summer School Closing